The Center for Artificial Intelligence in Business

The Center for Artificial Intelligence in Business conducts research and outreach to realize the potential of AI through intentional design and governance frameworks that center on human judgment and creativity. The center works in conjunction with the university’s Artificial Intelligence Interdisciplinary Institute at Maryland (AIM).

Mission: To facilitate the creation of amazing and safe products and services through intentional AI-enabled design and governance frameworks.

Transforming teaching, research and the practice of business

AI is already transforming the way people work and presents major threats and opportunities for business and society.

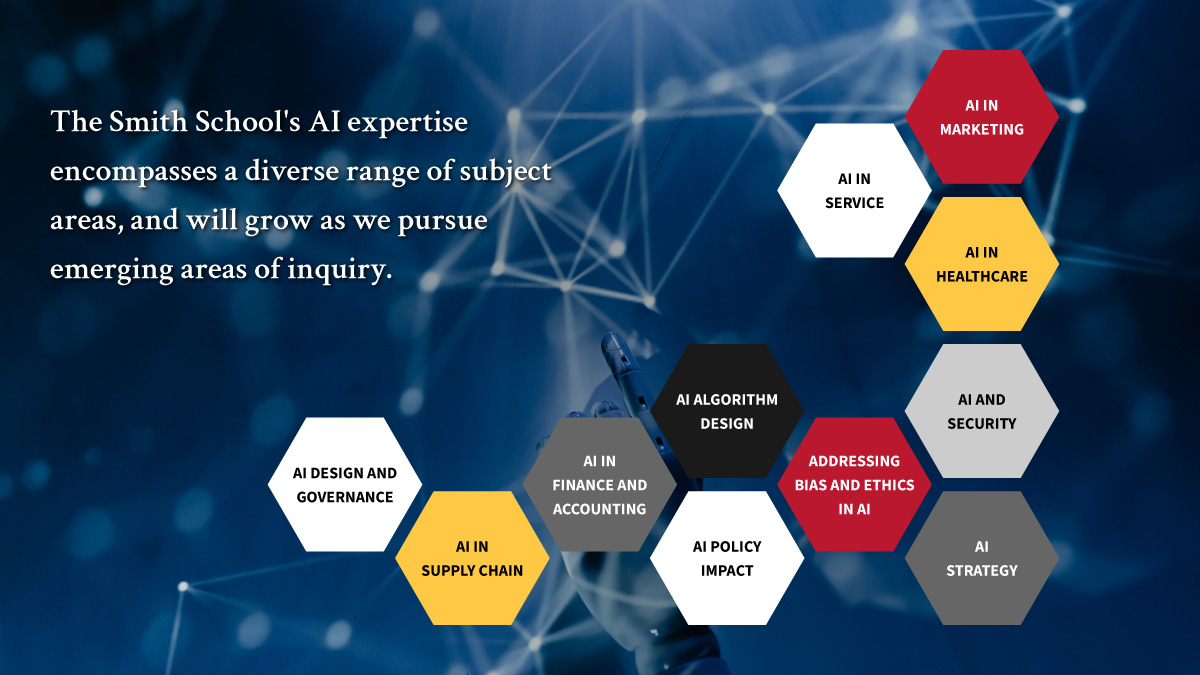

At the Smith School, our robust research enterprise explores the capabilities of AI as well as the careful design of incentives and penalties, governance and management strategies, the balancing of short-term and long-term interests, and the future of labor itself. Our findings can guide industry and government toward the responsible and effective use of these new tools and help organizations formulate a winning AI strategy.

Free Online Certificate in Artificial Intelligence and Career Empowerment

Ready to pivot your career with purpose? This free course from the Robert H. Smith School of Business explores how AI is reshaping industries—and your future. Learn from top professors and industry experts, dive into real-world use cases in marketing, tech, and more, and walk away with practical tools to navigate change, seize new opportunities, and thrive in an AI-driven world.

In the classroom, we also lead the way in teaching generative and contextual AI across undergraduate and graduate programs. Students learn how to assess and mitigate bias in the underlying data and in the resulting AI models; track the provenance of the data used to train large language models; design jobs and workflows that let workers combine human capabilities such as empathy, creativity, judgment and leadership with the capabilities of AI systems and models; and design AI systems to match the role they will play in a given task.

The CAIB Artificial Intelligence Research Student Internship Program

The program trains and develops the next generation of leaders in various fields related to AI, its governance, and its use for research purposes. The program offers research opportunities and diverse training on artificial intelligence that build on the strengths of graduate and undergraduate education at the University of Maryland. A key aspect of the internship is the work carried out closely with a supervisor, complemented by a bi-monthly workshop series that offers training on essential AI skills and opportunities to exchange and discuss progress.

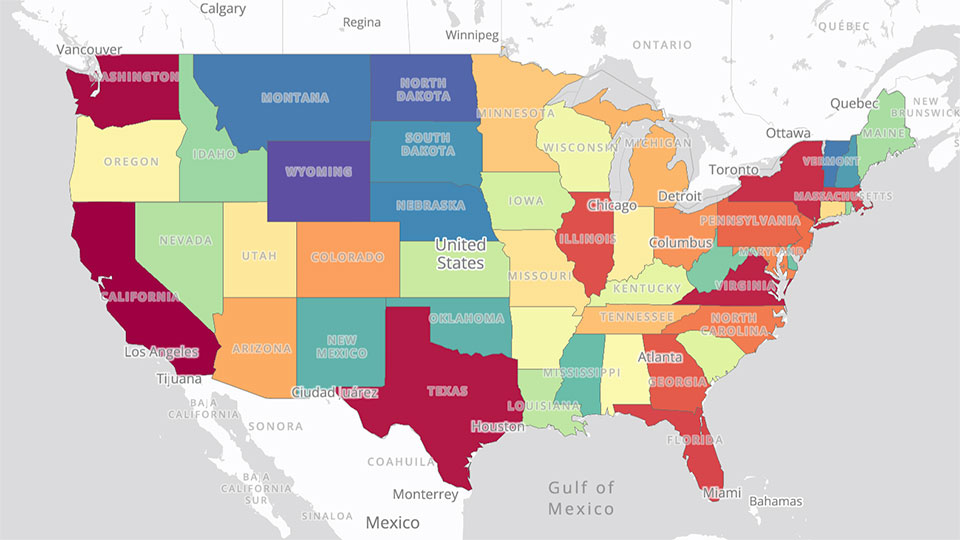

Smith School Launches UMD-AI LinkUp Maps

This dynamic new tool uses job posting data to analyze the spread of jobs requiring AI skills across the country – by sector, state and more granular geographic levels.

Research and Thought Leadership

The Smith School faculty have always made it a priority to stay ahead of emerging technologies as they pertain to business, and AI has proven applicable to virtually every facet of every industry. From professional baseball to robotics to equitable pricing, our top-ranked researchers are working to uncover the many ways AI can be put to use.

AI has always been about ‘intelligence,’ and it is particularly exciting that we are at a point in time where we can realize all this potential for business and society. This will require a level of understanding of AI that most business schools’ MBA curricula currently aren't equipped to provide, but the Smith School’s is.

Balaji Padmanabhan

Center Director and Dean's Professor of Decisions, Operations & Information Technologies


AI in Business Education

During this Poets & Quants panel, Professor Padmanabhan described Smith's approach to covering the key areas of AI that MBA students need to understand: capabilities, design, applications and governance.

When asked how AI will affect recruiting, he said that MBA students should think about how to demonstrate their skills, because AI will let companies screen applications in more sophisticated ways than scanning a static resume.

Smith professor Sean Cao, director of the Smith School's AI Initiative for Capital Market Research, has written a free textbook that simplifies AI for business students. Focusing on data applications in accounting and finance, it minimizes programming requirements to make AI more accessible. The book helps both students and firms understand practical AI use cases.

Read more: New Textbook Gives Businesses a Roadmap for Using AI

AI Initiative for Capital Market Research

The AI Initiative for Capital Market Research aims to enhance the practical application of AI in financial organizations, accounting firms, and regulatory bodies.

With seed funding from GRF CPAs and Advisors, the initiative has developed a free-to-download textbook covering how unstructured financial data and AI can be leveraged for accounting and finance applications. Initiative director and textbook co-author, Professor Sean Cao, has emphasized the importance of these topics for those outside of the computer science discipline and aims to make these skills accessible through this text and its accompanying video resources.

AI Use and Regulation: A Survey of U.S. Business Executives

A recent report from Professor Kislaya Prasad, current academic director of Smith's Center for Global Business, surveyed U.S.-based business executives about their attitudes toward AI adoption in their industries and their views on AI regulation.

5 Key Takeaways:

1. People are concerned about job displacement. Younger executives and those more closely involved with AI are more concerned about the impact on their careers.

2. There is strong support for AI regulation. Asked about three types of AI regulation – transparency about AI use, explainability of autonomous decisions, and third-party auditing for bias in algorithms – respondents expressed strong support, although support for mandating explainability was slightly lower.

3. Respondents in the Manufacturing sector favor restrictions on the export of key AI technologies, as do respondents from companies with greater global sales.

4. Generative AI is being widely used across sectors. The two most important uses overall are powering chatbots and marketing. In the Tech sector, coding is another important use. After GenAI, machine learning and computer vision are the next most important applications.

5. Improving the customer experience and improving operations are the key drivers of AI adoption. The main reasons given for not adopting AI technologies were the absence of a clear use case or perceived need, and limited technical expertise or resources.

What We’re Working On

Browse abstracts of important AI research by our faculty and their affiliates.

In this project, we focus on using AI to detect adverse events in drug development. Traditionally, pharmaceutical companies have relied on human experts to review patient feedback and identify adverse events, a process that can be inefficient. Recently, some companies have incorporated AI to automate part of this process and to assist human experts in making final decisions. In collaboration with a Europe-based pharmaceutical company, we propose a three-phase approach to improve the efficiency and accuracy of its adverse event detection process. In the first phase, the AI algorithm analyzes all textual feedback and identifies risky cases. In the second phase, a small sample is drawn from the cases the AI did not flag. Finally, experts assess the cases from both phases and decide which involve adverse events. We also introduce a novel loss function for the classification algorithm that balances accuracy against the workload required of the experts.
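The three phases can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's implementation: the model's risk scores are taken as given, and `triage`, `workload_aware_loss`, the threshold, the sampling rate, and the penalty weight `lam` are all hypothetical. In particular, the loss shown (binary cross-entropy plus a penalty on the expected share of cases sent to experts) is only one simple way to trade accuracy against workload; the paper's novel loss function is not reproduced here.

```python
import math
import random
from typing import List, Sequence, Tuple


def triage(scores: Sequence[float], threshold: float, sample_rate: float,
           seed: int = 0) -> Tuple[List[int], List[int]]:
    """Phases 1 and 2: flag risky cases, then randomly sample unflagged ones.

    `scores` are hypothetical model risk scores in [0, 1].
    """
    flagged = [i for i, s in enumerate(scores) if s >= threshold]
    unflagged = [i for i, s in enumerate(scores) if s < threshold]
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    k = min(len(unflagged), round(sample_rate * len(unflagged)))
    sampled = sorted(rng.sample(unflagged, k))
    return flagged, sampled


def workload_aware_loss(probs: Sequence[float], labels: Sequence[int],
                        lam: float) -> float:
    """Hypothetical loss: mean binary cross-entropy plus `lam` times the
    expected fraction of cases flagged (a proxy for expert workload)."""
    eps = 1e-12  # guards against log(0)
    n = len(probs)
    bce = -sum(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
               for p, y in zip(probs, labels)) / n
    expected_workload = sum(probs) / n
    return bce + lam * expected_workload


# Usage with made-up scores.
scores = [0.9, 0.2, 0.75, 0.1, 0.05, 0.6]
flagged, sampled = triage(scores, threshold=0.5, sample_rate=0.34)
review_queue = flagged + sampled  # Phase 3: experts review both sets
print(flagged)  # [0, 2, 5]
```

Raising `lam` pushes a model trained on this loss toward flagging fewer cases, while the random sample in phase 2 gives experts a check on what the model misses.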

Customer care is important for its role in relationship-building. This role has traditionally been performed by human customer agents, given the less mature “feeling intelligence” of AI. The emergence of interactive generative AI (GenAI) shows the potential for using AI for customer care in such emotionally charged interactions. Bridging practice and the academic literatures in marketing and computer science, this paper develops an AI-enabled customer care journey that progresses from accurate emotion recognition to empathetic response, emotional management support, and, finally, the establishment of an emotional connection. Marketing requirements for each stage are derived from in-depth interviews with top managers and a CMO survey. By juxtaposing these requirements against the current feeling capabilities of GenAI, the paper highlights the technological challenges that engineers need to tackle. It concludes with a set of marketing tenets for implementing and researching the caring machine. These tenets encompass verifying emotion recognition accuracy against marketing emotion theories using multiple emotion signals and methods; using prompt engineering to let customers reveal their thoughts and feelings, which improves emotion understanding; employing “response engineering” that draws on knowledge of customer preferences to personalize emotion management recommendations; and strategically deploying GenAI for emotional connection to enhance both customer emotional well-being and customer lifetime value.

Roland Rust and Ming-Hui Huang

In this paper, we examine user interactions with an AI assistant with the goal of inferring purchasing intent. With the proliferation of ambient computing, firms have more opportunities than ever to connect with their customers, and users are interacting with their AI assistants to do more than just shop. We analyze the text of user-initiated interactions and identify features of utterances that predict purchasing intent. Specifically, we build a bipartite network of nouns and verbs and measure the distance of specific words to “golden” purchasing words, such as “purchase,” “buy,” or “order.” We then predict purchasing intent as a function of this distance along with other linguistic features. One challenge of this research is measuring purchasing intent. We address it by using a large language model, specifically ChatGPT (GPT-3.5), to annotate our data with a measure of purchasing intent. We validate this method by comparing the results of our analysis with Google Ads cost-per-click (CPC) data, assuming that higher CPCs correspond to higher probabilities of purchase conversion. We find that the words used in an utterance can be mapped onto a network graph and effectively predict purchasing intent. Because the analysis is limited to a single utterance, no tracking of customers across interactions is necessary. Additionally, we validate the ability of large language models to predict purchasing intent.
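The distance feature can be illustrated with a small breadth-first search over a verb-noun graph. This sketch assumes the verb-noun pairs have already been extracted from utterances (for example, by a part-of-speech tagger); the helper names and example pairs are hypothetical, and the paper's actual graph construction and distance definition may differ.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Optional, Set, Tuple

GOLDEN = {"purchase", "buy", "order"}  # golden purchasing words named in the abstract


def build_graph(pairs: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Undirected bipartite graph linking each verb to the nouns it occurs with."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for verb, noun in pairs:
        graph[verb].add(noun)
        graph[noun].add(verb)
    return graph


def distance_to_golden(graph: Dict[str, Set[str]], word: str,
                       golden: Set[str] = GOLDEN) -> Optional[int]:
    """Fewest edges from `word` to any golden word, via breadth-first search.

    Returns None when no golden word is reachable.
    """
    if word in golden:
        return 0
    if word not in graph:
        return None
    seen = {word}
    queue = deque([(word, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbor in graph[node]:
            if neighbor in golden:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


graph = build_graph([("buy", "shoes"), ("find", "shoes"), ("play", "music")])
print(distance_to_golden(graph, "shoes"))  # 1
print(distance_to_golden(graph, "find"))   # 2
print(distance_to_golden(graph, "music"))  # None
```

Smaller distances mean a word sits closer to explicit purchase language in the network; in a model like the one described above, such distances would be one feature alongside other linguistic features.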

Citation: Ma, Liye, and Baohong Sun. “Machine learning and AI in marketing–Connecting computing power to human insights.” International Journal of Research in Marketing 37.3 (2020): 481-504.

Abstract: Artificial intelligence (AI) agents driven by machine learning algorithms are rapidly transforming the business world, generating heightened interest from researchers. In this paper, we review and call for marketing research to leverage machine learning methods. We provide an overview of common machine learning tasks and methods, and compare them with statistical and econometric methods that marketing researchers traditionally use. We argue that machine learning methods can process large-scale and unstructured data, and have flexible model structures that yield strong predictive performance. Meanwhile, such methods may lack model transparency and interpretability. We discuss salient AI-driven industry trends and practices, and review the still nascent academic marketing literature which uses machine learning methods. More importantly, we present a unified conceptual framework and a multifaceted research agenda. From five key aspects of empirical marketing research: method, data, usage, issue, and theory, we propose a number of research priorities, including extending machine learning methods and using them as core components in marketing research, using the methods to extract insights from large-scale unstructured, tracking, and network data, using them in transparent fashions for descriptive, causal, and prescriptive analyses, using them to map out customer purchase journeys and develop decision support capabilities, and connecting the methods to human insights and marketing theories. Opportunities abound for machine learning methods in marketing, and we hope our multi-faceted research agenda will inspire more work in this exciting area.

We study the use and economic impact of AI technologies. We propose a new measure of firm-level AI investments using employee resumes. Our measure reveals a stark increase in AI investments across sectors. AI-investing firms experience higher growth in sales, employment, and market valuations. This growth comes primarily through increased product innovation. Our results are robust to instrumenting AI investments using firms’ exposure to universities’ supply of AI graduates. AI-powered growth concentrates among larger firms and is associated with higher industry concentration. Our results highlight that new technologies like AI can contribute to growth and superstar firms through product innovation.

Mental health care has become a global concern, and the challenges of providing and accessing appropriate care are only magnified by a dearth of available counselors. At the same time, large language models (LLMs) are anticipated to hold transformative potential for numerous industries, including healthcare. LLMs’ exceptional capacity to offer individually tailored recommendations derived from massive medical knowledge can facilitate access to affordable mental health services. This raises the question of how human counselors respond to the emergence of AI counselors. We focus on an online peer counseling forum in China where expert and layperson counselors support mental health care seekers in a question-and-answer (Q&A) format. Several months ago, an LLM-powered chatbot was integrated into the forum to answer seekers’ inquiries, providing an excellent context in which to study changes in the behavior of incumbent human counselors in the presence of an AI chatbot. Leveraging the (unanticipated) introduction of the chatbot as a natural experiment, we investigate how human counselors react to the AI chatbot in terms of their level of engagement, quality of assistance and counseling strategies. This study is one of the first to examine the role of generative AI in addressing challenges in providing mental health care.

The AI Impact project provides a deep understanding of the adoption and impact of AI in U.S. federal government agencies. In recent years, public administration has shown rapidly increasing interest in artificial intelligence, machine learning, and deep learning solutions to support administrative tasks. But AI capabilities can have far-reaching consequences for citizens, and these new solutions can fundamentally alter the way governments operate. Through this project, the public will have access to reliable, up-to-date information to better track and understand the AI capabilities that government agencies put in place. We take a two-pronged approach. First, we automatically collect longitudinal panel data from publicly available information to build a tracker of AI adoption within government agencies. Second, we use surveys and qualitative interviews to complement these observations with information on the impact of AI adoption on the civil service, citizens, and society more broadly.

Tejwansh Anand, Bertrand Stoffel, Sanjay Chawla

Artificial intelligence systems have become an integral part of our economy. Recent studies have shown how AI enhances individual worker capabilities, and we have also gained a considerable understanding of how collective intelligence emerges when humans work together. Despite this potential, the capabilities of human-AI groups in solving complex problems are largely unknown. We study the collective intelligence of human-machine networks, examining 1) how the structure of human-AI teams affects performance across different types of tasks, 2) how performance varies with the proportion of humans in the team and with whether the identity of the agents is revealed, and 3) strategies to maintain collective intelligence in human-AI systems. We make comparisons against human-only groups and characterize the tasks that might be more amenable to human-AI groups. Drawing on these findings, we highlight important choices in the design of AI agents and their integration into human-AI groups as they relate to collective intelligence.

We present an approach for resolving one form of algorithmic bias in hiring decisions. In many such situations, an employer uses an AI tool to evaluate applicants based on their observable attributes, while trying to avoid bias with regard to a certain protected attribute. We show that simply ignoring the protected attribute will not eliminate bias (and actually may exacerbate it) due to correlations in the data. We present a provably optimal, fair hiring policy that depends on the protected attribute functionally, but in a way that eliminates statistical dependence. The policy does not set rigid quotas, and does not withhold information from decision-makers. Both synthetic and real data indicate that the policy can greatly improve equity for underrepresented and historically marginalized groups, often with negligible loss in objective value.
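As a simpler stand-in for the paper's provably optimal policy (which is not reproduced here), the sketch that follows shows the core idea of a rule that uses the protected attribute functionally while removing statistical dependence: each applicant's score is converted to a percentile within their own group, and a single cutoff is applied to those percentiles. The function names and data are hypothetical, and this within-group ranking rule is a swapped-in illustration, not the authors' method.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Dict, List, Sequence


def within_group_percentiles(scores: Sequence[float],
                             groups: Sequence[str]) -> List[float]:
    """Map each score to its empirical percentile within its own group.

    The mapping uses the protected attribute, but the resulting percentiles
    are spread the same way in every group, so they carry little information
    about group membership.
    """
    by_group: Dict[str, List[float]] = defaultdict(list)
    for score, group in zip(scores, groups):
        by_group[group].append(score)
    for group in by_group:
        by_group[group].sort()
    return [bisect_right(by_group[g], s) / len(by_group[g])
            for s, g in zip(scores, groups)]


def select(scores: Sequence[float], groups: Sequence[str],
           cutoff: float) -> List[bool]:
    """Advance applicants whose within-group percentile exceeds `cutoff`."""
    return [p > cutoff for p in within_group_percentiles(scores, groups)]


scores = [90, 80, 70, 60, 50, 40]
groups = ["A", "A", "A", "B", "B", "B"]
print(select(scores, groups, cutoff=0.5))
# [True, True, False, True, True, False]
```

A single raw cutoff of 65 on the same data would advance only group A applicants, which is how correlations between scores and group membership carry bias through even when the attribute is ignored. Note that this stand-in equalizes selection rates by construction, which is closer to a quota than the policy described above; it is meant only to show the mechanism.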

An AI analyst we built to digest corporate financial information, qualitative disclosures, and macroeconomic indicators is able to beat the majority of human analysts in stock price forecasts and generate excess returns compared with following human analysts. In the contest of "man vs. machine," the relative advantage of the AI analyst is stronger when the firm is complex and when information is high-dimensional, transparent and voluminous. Human analysts remain competitive when critical information requires institutional knowledge (such as the nature of intangible assets). The edge of AI over human analysts declines over time as analysts gain access to alternative data and in-house AI resources. Combining AI’s computational power with the human art of understanding soft information offers the greatest potential for generating accurate forecasts. Our paper portrays a future of "machine plus human" (instead of human displacement) in high-skill professions.

Growing AI readership (proxied by machine downloads and ownership by AI-equipped investors) motivates firms to prepare filings that are friendlier to machine processing and to mitigate linguistic tones that algorithms perceive unfavorably. The publication of Loughran and McDonald (2011) and the availability of BERT since 2018 serve as events in event studies that support attributing the decrease in measured negative sentiment to increased machine readership. This relationship is stronger among firms with higher benefits from sentiment management (e.g., external financing needs) or lower costs of it (e.g., litigation risk). This is the first study to explore the feedback effect of technology on corporate disclosure.
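Dictionary-based tone measures of the kind associated with Loughran and McDonald (2011) count how often words from a negative-word list appear in a filing. The sketch that follows uses a tiny, made-up word list as a placeholder; it is not the actual Loughran-McDonald dictionary, and the tokenization is deliberately simple.

```python
import re
from typing import Iterable

# Made-up placeholder list; the real Loughran-McDonald negative-word list is
# much larger and is not reproduced here.
NEGATIVE_WORDS = {"loss", "decline", "impairment", "litigation", "adverse"}


def negative_tone(text: str, negative_words: Iterable[str] = NEGATIVE_WORDS) -> float:
    """Share of alphabetic word tokens that appear in the negative-word list."""
    vocab = {w.lower() for w in negative_words}
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    return sum(token in vocab for token in tokens) / len(tokens)


print(negative_tone("Revenue saw a decline due to litigation."))  # 2 of 7 tokens
```

A lower value means fewer negative-list words per token, which is the kind of measured decline in negative sentiment the study attributes to machine readership.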

Artificial intelligence applications are beginning to proliferate across several industries, with public policy and laws in countries around the world playing catch-up in managing the potential negative impacts of AI while ensuring that technological advances are not thwarted. We are working on using “virtue ethics,” an ethical framework initially proposed by Plato and later adopted by Thomas Aquinas, to provide a foundation for managing and regulating AI. Virtue ethics relies on practical wisdom grounded in the principles of honesty, prudence, temperance, justice and fortitude to regulate technological advances. Our research examines the typology of virtue (or cardinal) ethics in different countries in order to extract a universalist view. In the case of social media, there is consensus on how societies view the privacy, accuracy, property and access tenets of virtue ethics. In healthcare, a long tradition of medical ethics predates AI’s impact on the sector. The big question is whether there is a universal global mechanism, based on virtue ethics, for managing and regulating AI for the future of humanity.

The objective in traditional reinforcement learning (RL) usually involves an expected value of a cost function that doesn’t include risk considerations. In our work, we consider risk-sensitive RL in two settings: one where the goal is to find a policy that optimizes the usual expected value objective while ensuring that a risk constraint is satisfied, and the other where the risk measure is the objective. We focus on policy gradient search as the solution approach. Related work considers stochastic gradient estimation in machine learning settings where the objective (e.g., the loss function used in training neural networks) may involve discontinuities.
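The abstract does not name the risk measure, so the sketch that follows assumes conditional value-at-risk (CVaR), a common choice in risk-sensitive RL. It estimates CVaR from sampled episode costs and forms a Lagrangian for the constrained setting (minimize expected cost subject to CVaR being at most a budget); in the second setting, the CVaR itself would be the objective. A policy gradient method would differentiate such estimates with respect to the policy parameters, which this sketch does not do. All names and numbers are hypothetical.

```python
import math
from typing import Sequence


def empirical_cvar(costs: Sequence[float], alpha: float) -> float:
    """Mean of the worst (1 - alpha) fraction of sampled episode costs.

    A simple tail-average estimator; it keeps ceil((1 - alpha) * n) samples.
    """
    if not costs:
        raise ValueError("need at least one sampled cost")
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be in [0, 1)")
    worst_first = sorted(costs, reverse=True)
    k = max(1, math.ceil((1.0 - alpha) * len(worst_first)))
    return sum(worst_first[:k]) / k


def lagrangian(costs: Sequence[float], alpha: float, budget: float,
               lam: float) -> float:
    """Expected cost plus `lam` times the violation of CVaR <= budget.

    A constrained method would minimize this over the policy and raise `lam`
    while the constraint is violated.
    """
    mean_cost = sum(costs) / len(costs)
    return mean_cost + lam * (empirical_cvar(costs, alpha) - budget)


costs = [float(c) for c in range(1, 11)]  # hypothetical episode costs
print(empirical_cvar(costs, alpha=0.5))  # 8.0
print(lagrangian(costs, alpha=0.5, budget=7.0, lam=2.0))  # 7.5
```

The mean cost here is 5.5, but the worst half of episodes averages 8.0; a risk-neutral objective sees only the first number, which is the gap risk-sensitive RL is meant to address.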

Michael Fu and L.A. Prashanth, IIT Madras (Chennai).

By around mid-January, we plan to launch AiMaps.ai, an information website inspired by and modeled on the Johns Hopkins Covid-19 Dashboard. This project leverages LinkUp’s industry-leading job posting data to visualize the spread of jobs requiring AI skills across the country by industry, role, and region. Among other things, the resulting interactive map allows users to track the creation of AI jobs each month, rank states by their share of those jobs, and determine a region’s “AI Intensity”: the ratio of its AI jobs to all other postings. UMD-LinkUp AI Maps is the world’s first attempt to map the creation of AI jobs. To date, a handful of research papers in academia and industry have used keywords to identify which jobs require AI skills and which do not. Our analysis indicates that this is an extremely flawed approach, with over 70% false positives. In contrast, we use a fine-tuned large language model (LLM) to distinguish jobs requiring AI skills from others. When compared against manual checks by multiple AI researchers, this LLM approach achieves accuracy above 90%. We exclude jobs based outside the United States.
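Both map metrics are simple ratios. The sketch that follows reads “the ratio of its AI jobs to all other postings” literally, dividing AI postings by non-AI postings; if the dashboard instead divides by all postings, only the denominator changes. Function names and counts are hypothetical and are not LinkUp data.

```python
from typing import Dict, List, Tuple


def ai_intensity(ai_postings: int, total_postings: int) -> float:
    """AI postings divided by all other (non-AI) postings in a region."""
    other = total_postings - ai_postings
    if other <= 0:
        raise ValueError("need at least one non-AI posting")
    return ai_postings / other


def rank_states_by_share(ai_by_state: Dict[str, int]) -> List[Tuple[str, float]]:
    """Rank states by their share of all AI postings nationwide."""
    national = sum(ai_by_state.values())
    shares = {state: count / national for state, count in ai_by_state.items()}
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


print(ai_intensity(50, 1050))  # 0.05
print(rank_states_by_share({"MD": 30, "VA": 50, "DE": 20}))
# [('VA', 0.5), ('MD', 0.3), ('DE', 0.2)]
```

The two metrics answer different questions: share ranks states by how much of the national AI job market they hold, while intensity measures how AI-heavy a region's own job market is, regardless of its size.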

Powerful large language models are revolutionizing how we can process large volumes of unstructured data in their natural, raw text format that had previously been deemed too vast to study, opening new lines of inquiry in business, the social sciences and the humanities. These technologies enable scholars to examine in depth historical events that were previously thought too difficult and time-consuming to study, revealing new insights and even providing a new framework for understanding our past. Using analytical tools including the GPT-4 API at scale, we transform over 280,000 PDFs and JPG images into a searchable open-source database focused on the Colombian armed conflict. This dataset will support the quantification and recording of violent incidents, victims, and organized criminal groups, thereby aiding the creation of collective memory and supporting the peace process. At the same time, we use the dataset to support multiple avenues of academic inquiry, including, first, a study of the Colombian government’s coca eradication efforts and their connection to violence and, second, a study of how violence spreads and moves using spatiotemporal models.

Margrét Bjarnadóttir, David Anderson (Villanova), Galia Benitez (Michigan State University)

Artificial intelligence has seen tremendous successes over the years and is expected to lead to an era of augmented intelligence systems that seamlessly leverage both human and AI capabilities. Yet this is not without challenges, and getting there requires a focus on intentional design and experimentation. This work formalizes an augmented intelligence principle regarding the effectiveness of such systems and uses this principle to (a) highlight the importance of searching for optimal designs, (b) draw attention to different ways evaluation might need to be approached, (c) show the distinction between superiority in expectation and universal dominance, and (d) understand the inherent limitations of any such augmented intelligence design that stem from the inability to exhaustively search all the human and AI configurations it comprises. Drawing on this perspective, we highlight specific elements that effective AI governance will need to take into account beyond those currently being presented.
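The distinction in (c) can be made concrete with hypothetical per-task scores for two designs. This is our own illustration of the two notions, not the paper's formalism: one design can win on average while still losing on some tasks, so it is superior in expectation without universally dominating.

```python
from statistics import mean
from typing import Sequence


def better_in_expectation(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if design `a` has higher average performance than design `b`."""
    return mean(a) > mean(b)


def universally_dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is at least as good as `b` on every task and better on one."""
    if len(a) != len(b):
        raise ValueError("designs must be scored on the same tasks")
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


human_ai = [0.9, 0.8, 0.4]  # hypothetical per-task scores
ai_only = [0.6, 0.6, 0.6]
print(better_in_expectation(human_ai, ai_only))  # True
print(universally_dominates(human_ai, ai_only))  # False
```

Here the human-AI design averages 0.7 against 0.6 but does worse on the third task, so an evaluation that reports only averages would hide a case where the simpler design is preferable.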

AI regulation is a burning policy issue being debated around the world. U.S. policymakers at both the state and federal levels are considering a variety of regulatory proposals. These include (1) increased transparency about decisions and content generated by AI, (2) mandated explainability, (3) mandatory third-party evaluation or auditing of AI-based systems to ensure they are free from bias based on protected personal characteristics, and (4) explicit assignment of liability for harm caused by the use of AI-based systems. This survey elicits views on AI regulation from a sample of U.S. business professionals in the managerial ranks of U.S. companies. In addition to views on the regulatory proposals, the survey collects information to help understand the determinants of these views.

There is an increasingly critical need for human-labeled data to refine and evaluate AI and ML models. The current state-of-the-art method for assigning such labels relies on a majority vote from three human labelers. However, this approach has limitations, particularly with controversial topics like determining whether content is toxic. This work experimentally evaluates the effectiveness of nudge-based interventions to accompany the annotation tasks commonly assigned to human labelers. The first experiment assesses the impact of information nudges on labelers’ ability to identify sexist content. These nudges increase the detection of toxic content, especially among those who are less likely to identify toxicity, while not affecting the identification of non-toxic content. The second experiment introduces empathy priming, which is found to significantly improve the identification of controversial sentences, particularly among demographic groups less likely to identify such content accurately. Our findings can aid ongoing efforts to establish dependable systems for data labeling, subsequently enhancing AI and ML models and facilitating more efficient moderation of toxic content.
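The three-labeler majority vote can be sketched with `collections.Counter`. The agreement share is an addition here, included to show how a 2-1 split on a controversial item is reported as a confident label even though one annotator disagreed; the label names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_vote(labels: Sequence[Hashable]) -> Optional[Hashable]:
    """Label chosen by a strict majority of annotators, or None if there is none."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None


def agreement(labels: Sequence[Hashable]) -> float:
    """Fraction of annotators who chose the most common label."""
    if not labels:
        return 0.0
    return Counter(labels).most_common(1)[0][1] / len(labels)


votes = ["toxic", "toxic", "not_toxic"]  # hypothetical labels for one sentence
print(majority_vote(votes))  # toxic
print(agreement(votes))      # 0.6666666666666666
```

With three labelers and a binary label, a majority always exists, so the vote alone cannot distinguish a unanimous item from a contested one; that is part of why the way individual labelers are prompted matters for controversial content.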

Understanding the competitive landscape of public and private companies is essential for a range of decision-making tasks. Prior work has often characterized competition using inflexible industry classifications or relied on proprietary data for public companies. The almost total lack of coverage of private companies has also been a severe limitation. The Business Open Knowledge Networks (BOKN) project addresses these limitations by using a vast and untapped resource for understanding the competitor network for public and private companies: Web text. We use representation learning techniques to generate robust representations (word embeddings) of companies in a high-dimensional vector space and to accurately identify the competitor network of peers for a focal company. We evaluate the competitor network against multiple downstream applications including the following: (1) Predicting profitability; (2) Validating the self-identified competitors of a focal company; (3) Identifying In-Sector (IS) peers that occur in the same industry sector as the focal company; (4) Determining industry classification codes (NAICS) for a focal company. Our proposed approaches match or improve on the performance of prior baselines that relied on curated corpora, despite the use of noisy Web text. A use case provides insights from scenarios where companies can benefit the most from an investment in generating high-quality Web text about their products and services. We use well-studied measures from the finance literature to characterize companies. We identify the scenarios where the Web text of two companies is more likely to predict that they are In-Sector (IS) peers. The use case reflects a virtuous cycle of features that increase the likelihood of identifying IS peers, in precisely those scenarios where accurately identifying IS peers may have the greatest benefit. 
Example scenarios include companies that are operating in a competitive product market, a market with significant venture capital funding, or a market with product fluidity, as well as companies that target significant resources toward advertising.
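Identifying a focal company's peers from embeddings typically comes down to ranking other companies by vector similarity. The sketch that follows assumes cosine similarity and uses tiny made-up three-dimensional vectors; the project's actual representation learning technique, dimensionality, and similarity measure are not specified here, and the company names are placeholders.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def top_peers(focal: str, embeddings: Dict[str, Sequence[float]],
              k: int) -> List[Tuple[str, float]]:
    """The k companies whose embeddings are most similar to the focal company's."""
    target = embeddings[focal]
    scored = [(name, cosine(target, vec))
              for name, vec in embeddings.items() if name != focal]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


embeddings = {  # made-up 3-dimensional vectors
    "focal": [1.0, 0.1, 0.0],
    "peer_1": [0.9, 0.2, 0.0],
    "peer_2": [0.8, 0.0, 0.1],
    "unrelated": [0.0, 0.1, 1.0],
}
print([name for name, _ in top_peers("focal", embeddings, k=2)])
# ['peer_1', 'peer_2']
```

Linking each company to its top-k peers in this way yields a competitor network that can then be evaluated on downstream tasks such as those listed above.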

The content-creation and design sectors have been early movers in deploying generative AI, with a wide variety of products and platforms now regularly incorporating GenAI. While there is growing interest in understanding the role of GenAI in content creation and design, it is also important to understand how the use of GenAI affects designers’ organic (human-generated) content. This study uses a large-scale dataset from an online design-crowdsourcing platform to examine how the adoption of GenAI for design influences the novelty and popularity of designers’ organic creations. We employ advanced deep learning models and econometric methods to causally identify the spillover effects of designers’ use of GenAI. Our findings provide insights into the role of domain expertise and AI expertise, how those with domain expertise use GenAI differently from others, and how this in turn influences the novelty and popularity of their subsequent organic design creations.

Immediate, personalized feedback is essential for the writing practice of second language learners. Automated Essay Scoring (AES) systems offer a promising solution by delivering tailored scores and feedback, especially when human teachers are unavailable. However, the real-world application of AES systems is often fraught with challenges such as diverse writing contexts and ambiguous grading rubrics. In this study, we leverage GPT-4 and its remarkable zero-shot and few-shot capabilities as an AES instrument, focusing on tasks with complex contexts and rubrics. Extensive experiments reveal that LLMs exhibit impressive consistency, generalizability, and interpretability in AES-related tasks. Notably, when we incorporate feedback from human evaluators, one standout advantage emerges: the in-depth feedback provided by LLMs helps teachers grade more efficiently while enabling students to sharpen their own assessment skills. These insights highlight the potential for enhanced teaching methods and open doors to innovative, student-centric learning experiences supported by sophisticated AI.
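A standard way to quantify consistency between an AES system and human raters is quadratic weighted kappa (QWK). The abstract does not say which consistency measure was used, so QWK is an assumption here; the sketch computes it from two lists of integer scores on a shared scale, with hypothetical data.

```python
from typing import List, Sequence


def quadratic_weighted_kappa(a: Sequence[int], b: Sequence[int],
                             min_score: int, max_score: int) -> float:
    """Agreement between two raters on an ordinal scale, penalizing
    disagreements by the squared distance between the scores."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty score lists of equal length")
    n = max_score - min_score + 1
    if n < 2:
        raise ValueError("score scale needs at least two levels")
    observed: List[List[float]] = [[0.0] * n for _ in range(n)]
    for x, y in zip(a, b):
        observed[x - min_score][y - min_score] += 1
    total = len(a)
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n)) for j in range(n)]
    num = den = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            expected = hist_a[i] * hist_b[j] / total  # chance agreement
            num += weight * observed[i][j]
            den += weight * expected
    if den == 0:
        raise ValueError("kappa is undefined when both raters give every item the same score")
    return 1.0 - num / den


llm_scores = [2, 3, 4, 4, 1]    # hypothetical LLM scores on a 1-4 rubric
human_scores = [2, 3, 3, 4, 1]  # hypothetical human scores for the same essays
print(quadratic_weighted_kappa(llm_scores, human_scores, 1, 4))
```

A value of 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean systematic disagreement; the quadratic weights make a one-point miss far cheaper than a three-point miss, which suits ordinal rubric scores.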

Generative AI has put content creation in the hands of the masses. Such models are trained on large datasets composed of media scraped from the Internet, much of which is copyrighted. These models enable the replication of the styles of individual creators, be they writers, visual artists, musicians, or actors, who have not consented to this use of their work, raising questions about fair compensation. We examine the effect of invoking an artist’s name in the text prompt used to generate an image. Using deep learning, we demonstrate that doing so increases preference for the resulting image, and we show that it also increases consumers’ willingness to pay for products featuring the images. We also examine how artist compensation affects consumers’ willingness to pay. Beyond quantifying the commercial value associated with using an artist’s style, we offer guidance to marketers seeking to leverage AI-generated content on the value that consumers place on compensating the artists who contributed to the work.

AI systems have evolved fast, spurring innovation, increasing productivity and creating new value for society. Yet they also introduce new and profound challenges. We should embrace rather than resist AI, and be aware of the strengths, weaknesses, dangers and opportunities inherent in these technologies.

Prabhudev C. Konana

Dean of the Robert H. Smith School of Business

AI Symposium on Design and Governance

In January 2024, the Smith School welcomed thought leaders, preeminent researchers and industry executives to explore the opportunities AI presents to business, government and society. Through a series of talks, panel discussions and Q&A sessions, our experts explored the high-stakes decisions and questions involved in creating and implementing AI solutions for the real world.

Faculty Affiliates

Our faculty affiliates play an integral role in bringing the impact of AI into every department at Smith.

Distinguished University Professor

mwedel@umd.edu

Associate Professor

lyang1@umd.edu

Associate Professor

kpzhang@umd.edu

Academic Director, Master of Science in Accounting

lzhou@umd.edu

Associate Professor

bozhou@umd.edu

PhD Affiliates

Teaching Innovation and Community Engagement

It’s one thing to say your faculty and curricula are immersed in AI and data-driven decision-making, but it’s another to actually do it. At Smith, we saw the wave of AI coming from a distance and have invested considerable time and energy in preparing to embrace it and to help our students and the larger community apply it to their careers and even their everyday lives.

Small businesses in Prince George’s County, Maryland, will receive AI training through a partnership between the University of Maryland’s Smith School of Business and the county’s Economic Development Corporation. This innovative program aims to equip entrepreneurs with AI skills for business growth and competitiveness.

The Smith School has joined Deloitte to launch the Deloitte Initiative for AI and Learning (DIAL), to help expand learning and development opportunities for faculty and students across the university’s various colleges.

The Maryland Smith AI for Business Leaders professional certificate program is designed for business leaders who seek to leverage AI/ML to improve their businesses.

AI Initiatives Under Development

Our faculty are busy exploring new ways to bring AI into their teaching and research. Here are a few ways we’re taking a fresh look at AI and its many capabilities.

- Developing an AI specialization for the Full-time MBA program

- Building an AI literacy course on using generative AI to produce and analyze unstructured financial data such as conference call transcripts and press releases

- Adding ChatGPT as a coding copilot to an ML class

- Planning an AI Day for High School Students at UMD

- Launching an AI Empowerment Program for Small Businesses in Prince George’s County

- Providing an AI for Small Business Open Online Course

- Adding a new course on causal inference at the undergraduate level

STEM-Designated Master’s and MBA Programs

All of the Smith School’s MBA programs and nearly all of its master’s programs are now STEM-designated, recognizing the analytical, data-informed nature of the curriculum. The STEM designation acknowledges the value of the degree for those seeking to work in business analytics and technology-driven enterprises.

Beginning in 2024, our Full-time MBA program will include the unprecedented ability to formally specialize in AI. Learn more about this program and its many options for future-proofing your skill set.

Instilling AI Competency in Smith Students

Smith faculty are challenging students to meet the rising tide of AI in various business applications. See what courses students will have the opportunity to take in each of our programs.

- Causal Inference and Data Analytics taught by Evan Starr and David Waguespack

- Digital Marketing taught by Wendy Moe

- The Societal Impact of Artificial Intelligence taught by Roland Rust

- Technology, Society, and the Future of Humanity taught by Anand Anandalingam

- Accounting Information Systems taught by Jim McKinney

- Data Analytics taught by Radu Lazar and Margret Bjarnadottir

- Auditing Automation and Analytics

- Analytics for Finance & Accounting: Data Structures and Applied AI

- Data Mining and Predictive Analytics

- Enterprise Cloud Computing and Big Data

- Big Data and Artificial Intelligence for Business (OMSBA)

- Enterprise Cloud Computing and Big Data

- Data Mining and Predictive Analytics

- Harnessing AI for Business

Marketing Ethics and Policies